Variational Autoencoders with Missing Data

This is a project in collaboration with Jill-Jênn Vie from Inria-Lille, France.

Autoencoders

Dimensionality Reduction

In some applications, such as data visualization or data storage, or simply when the dimensionality of our data is too large, we’d like to reduce the dimensionality of the data while keeping as much information as possible. So we’d like to construct an encoder that takes the original data and transforms it into a latent variable of lower dimensionality. Sometimes we’d also like to recover the original points (with minimal error) from their encoded versions. So we need a decoder that takes points from the latent space and transforms them into points in the original space.

Original/Greedy Autoencoder

Let’s denote by $x$ an observation in the original space and by $z$ its encoded value. If we denote by $f$ the encoder function, then $z = f(x)$. We can decode $z$ through a decoder function $g$ and try to recover the original point from this decoded value. In general, $g(z)$ is not equal to $x$; in fact, $g(z)$ does not necessarily belong to the original space, so we need one more step to transform $g(z)$ into a value that does. This final transformation can be done in two different ways. The first (and original) one is to use a deterministic function $h$ that maps the decoded value into the original space, $\hat{x} = h(g(z))$. The second one is to take $g(z)$ as the parameter of a random variable and draw an observation from that distribution, $\hat{x} \sim P(\,\cdot \mid g(z))$.
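To make the pipeline $x \to z = f(x) \to g(z) \to \hat{x}$ concrete, here is a minimal sketch with hand-written toy functions rather than neural networks. The names `encoder`, `decoder`, `h_deterministic` and `sample_bernoulli` are hypothetical, and the 4-pixel binary input is an illustrative assumption. The decoder outputs values in $(0,1)$, which are not in the binary original space, so the two final steps described above are shown side by side.

```python
import math
import random


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def encoder(x):
    """Hypothetical toy encoder f: {0,1}^4 -> R^2 (averages pairs of inputs)."""
    return [(x[0] + x[1]) / 2, (x[2] + x[3]) / 2]


def decoder(z):
    """Hypothetical toy decoder g: R^2 -> (0,1)^4.

    Its output is NOT in the original binary space {0,1}^4.
    """
    y = []
    for zj in z:
        v = sigmoid(8.0 * (zj - 0.5))  # near 1 when zj is near 1, near 0 when zj is near 0
        y.extend([v, v])
    return y


def h_deterministic(y, threshold=0.5):
    """Option 1: a deterministic map h from decoded values into {0,1}^4."""
    return [1 if yi >= threshold else 0 for yi in y]


def sample_bernoulli(y, rng):
    """Option 2: use each decoded value as a Bernoulli parameter and sample."""
    return [1 if rng.random() < yi else 0 for yi in y]


x = [1, 1, 0, 0]
z = encoder(x)                                    # [1.0, 0.0]
y = decoder(z)                                    # roughly [0.98, 0.98, 0.02, 0.02]
x_hat = h_deterministic(y)                        # [1, 1, 0, 0]
x_sample = sample_bernoulli(y, random.Random(0))  # random; usually equal to x
```

In an actual autoencoder, `encoder` and `decoder` would be learned networks; only the shape of the computation carries over.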

When we model $f$ and $g$ as neural networks (usually deep neural networks), we get the so-called autoencoder, where $f$ and $g$ are learned from a training data set according to some loss function $\mathcal{L}$.

Example

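For example, if $x \in \{0,1\}^D$ is an observation of a (multivariate) Bernoulli distribution, we could choose $\mathcal{L}$ as the log-likelihood, where $p = g(f(x))$ is the parameter of that distribution, that is

$$\mathcal{L}(x, p) = \sum_{i=1}^{D} \left[ x_i \log p_i + (1 - x_i) \log (1 - p_i) \right],$$

where $p_i \in (0,1)$ is the $i$-th output of the decoder, and $f$ and $g$ are learned with (stochastic) gradient ascent.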
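The Bernoulli log-likelihood above can be sketched in a few lines of plain Python. This is an illustrative sketch, not the project’s training code: it assumes the decoder produces logits $a_i$ with $p_i = \sigma(a_i)$ (a sigmoid output, as in the MNIST experiment below), and it uses the standard identity $\partial \mathcal{L} / \partial a_i = x_i - p_i$. The functions `bernoulli_log_likelihood` and `grad_wrt_logits` are hypothetical names, and the gradient stops at the decoder’s output; a full autoencoder would backpropagate it through $g$ and $f$.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def bernoulli_log_likelihood(x, p, eps=1e-12):
    """Sum over pixels of x_i log p_i + (1 - x_i) log(1 - p_i)."""
    total = 0.0
    for xi, pi in zip(x, p):
        pi = min(max(pi, eps), 1.0 - eps)  # clip to avoid log(0)
        total += xi * math.log(pi) + (1 - xi) * math.log(1.0 - pi)
    return total


def grad_wrt_logits(x, a):
    """If p_i = sigmoid(a_i), then dL/da_i = x_i - p_i."""
    return [xi - sigmoid(ai) for xi, ai in zip(x, a)]


# One gradient-ascent step on the output logits (hypothetical values).
x = [1, 0, 1]
a = [2.0, -1.0, 0.0]
before = bernoulli_log_likelihood(x, [sigmoid(v) for v in a])
a_new = [ai + 0.1 * gi for ai, gi in zip(a, grad_wrt_logits(x, a))]
after = bernoulli_log_likelihood(x, [sigmoid(v) for v in a_new])
# A small ascent step increases the (concave) log-likelihood: after > before.
```

Because the log-likelihood is concave in the logits, small steps along $x - p$ always increase it, which is why gradient *ascent* is the natural choice here.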

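Note that while $x \in \{0,1\}^D$, the decoder output $p = g(f(x))$ lies in $(0,1)^D$. Thus, we can take $\hat{x}_i = 1$ if $p_i \geq 0.5$ and $\hat{x}_i = 0$ otherwise (a deterministic approach), or draw $\hat{x}_i \sim \mathrm{Bernoulli}(p_i)$ (a random approach); the standard is to take the deterministic approach to go from $p$ to $\hat{x}$.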

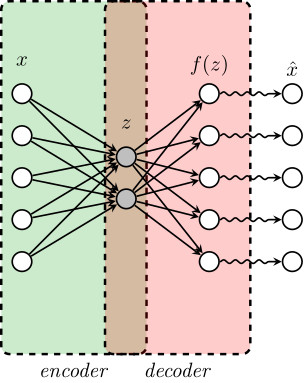

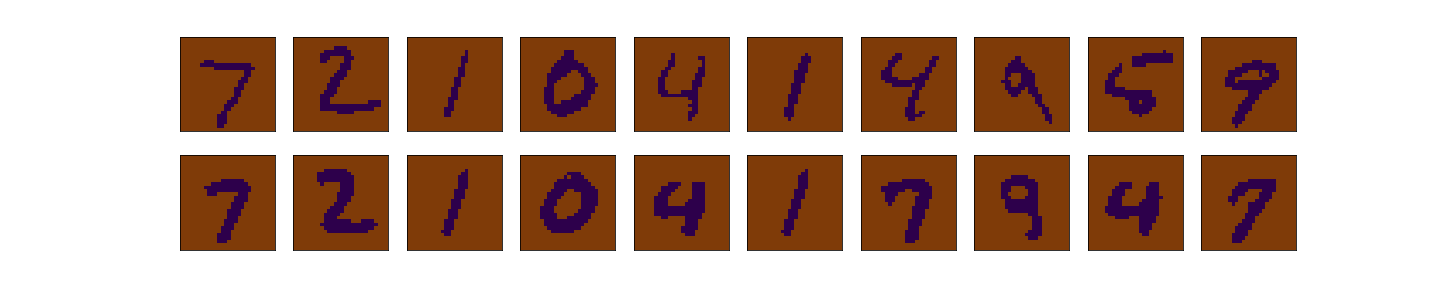

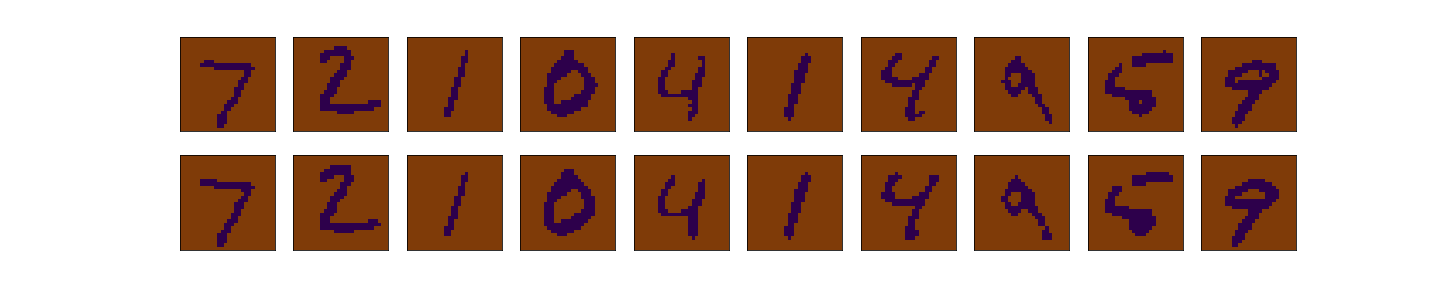

To illustrate this, we consider the MNIST data set, which consists of images of handwritten digits. The original value of each pixel is between 0 and 1, but we have binarized it, assigning 1 if the value of the pixel is greater than or equal to 0.5 and 0 otherwise. Thus, we can apply the Bernoulli log-likelihood to each pixel. Each image is 28x28 pixels (thus each image has 784 pixels). The encoder-decoder structure is symmetric: $f$ and $g$ are multilayer perceptrons with two hidden layers of 392 and 192 neurons, respectively, and ReLU activation functions. The last layer of the decoder has 784 neurons and a sigmoid activation function. The latent layer ($z$) has no activation function, and in our experiments we vary its number of neurons (that is, the dimension of the latent space), which we denote by $d$, setting it to either $d = 2$ or $d = 98$.
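The preprocessing and architecture described above can be sketched in plain Python as follows. This is a hedged illustration, not the code used for the experiments: the weights are random and untrained, the He-style initialization scale is an assumption, and the input is a random stand-in rather than a real MNIST image. The helper names (`binarize`, `architecture`, `count_params`, `init_layers`, `mlp`) are hypothetical. It shows the binarization rule, the mirrored layer widths $784 \to 392 \to 192 \to d$ and $d \to 192 \to 392 \to 784$, the activations (ReLU on hidden layers, none on the latent layer, sigmoid on the output), and the resulting parameter counts.

```python
import math
import random


def binarize(pixels, threshold=0.5):
    """Map grey levels in [0, 1] to {0, 1}: 1 if value >= threshold, else 0."""
    return [1 if v >= threshold else 0 for v in pixels]


def architecture(latent_dim, input_dim=784, hidden=(392, 192)):
    """Layer widths of the symmetric encoder f and decoder g."""
    enc = [input_dim, *hidden, latent_dim]
    dec = enc[::-1]
    return enc, dec


def count_params(widths):
    """A dense layer n_in -> n_out has n_in * n_out weights and n_out biases."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(widths, widths[1:]))


def init_layers(widths, rng):
    """Random, UNTRAINED weights and zero biases (illustration only)."""
    layers = []
    for n_in, n_out in zip(widths, widths[1:]):
        scale = math.sqrt(2.0 / n_in)  # He-style scaling: an assumption
        W = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((W, [0.0] * n_out))
    return layers


def identity(v):
    return v


def sigmoid(v):
    # Numerically stable logistic function.
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def mlp(layers, x, last_activation):
    """Dense layers with ReLU on hidden layers and last_activation on the output."""
    h = x
    for i, (W, b) in enumerate(layers):
        a = [sum(w * hi for w, hi in zip(row, h)) + bj for row, bj in zip(W, b)]
        if i < len(layers) - 1:
            h = [max(0.0, v) for v in a]
        else:
            h = [last_activation(v) for v in a]
    return h


rng = random.Random(0)
enc_widths, dec_widths = architecture(latent_dim=2)
f = init_layers(enc_widths, rng)
g = init_layers(dec_widths, rng)
x = binarize([rng.random() for _ in range(784)])  # stand-in for a binarized MNIST image
z = mlp(f, x, identity)  # latent code of length d = 2 (no activation)
p = mlp(g, z, sigmoid)   # 784 Bernoulli parameters, one per pixel
```

With these widths, `count_params` gives 383,562 encoder parameters and 384,344 decoder parameters for $d = 2$, so most of the parameters sit in the two layers adjacent to the 784-pixel input and output.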

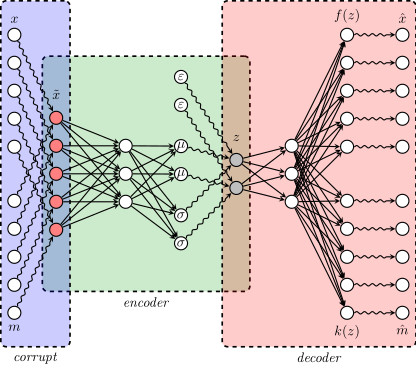

The next figure shows the structure of our autoencoder. We have preserved this same structure in all our experiments.

<img src="greedy_ae_latent.gif" title="Evolution of the Latent Space" width="300"> <img src="greedy_ae_latent.png" title="Final Latent Space" width="300">

A good introduction to VAEs can be found here